I've been working with the Google Cloud Platform (GCP) at work for a little over a month now, and I'm getting comfortable with it. I haven't done an exact price comparison with EC2/AWS, but apparently it's a bit cheaper. Based on my last six weeks of experience, I'm pretty happy with the pricing so far. Static IP addresses are free, and the dashboard tells you where you can optimize your hosting to save money, which I think is great. There's lots of good documentation, and the libraries for adding software are full-featured and work well. While GCP may not be as full-featured as AWS, I find its menus and dashboards easier and more intuitive to navigate.

Hiccups I ran into:

- I can't dynamically add CPU or memory; I have to stop the instance and restart it. The same goes for disk space. I'd also like to be able to make some of my disks smaller, but I can't seem to do that either without some kind of reboot.

- If an instance has a secondary SSD drive, it seems that there are issues with discovering it on a 'clone' or a restart after a memory or CPU change. I have to log in through the back end (fortunately GCP provides an interface for this) and comment out the reference to the secondary drive in the /etc/fstab file to get it going again. This seems buggy.
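A commonly recommended mitigation for a secondary disk that isn't found at boot (not verified here against this exact GCP clone/resize issue) is to add the `nofail` mount option to that disk's `/etc/fstab` entry, so a missing device isn't treated as a fatal error during boot. The sketch below is a hypothetical helper, `find_risky_mounts` (not a GCP tool), that flags non-root, non-swap entries without that option. It assumes the standard whitespace-separated fstab layout and only reads the file's text; it changes nothing.

```python
def find_risky_mounts(fstab_text: str) -> list[str]:
    """Return mount points of non-root, non-swap entries that lack 'nofail'."""
    risky = []
    for raw_line in fstab_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments (including entries already commented out).
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) < 4:
            continue  # malformed entry: leave it for a human to inspect
        _device, mount_point, fs_type, options = fields[:4]
        # The root filesystem is required to boot, and swap is handled separately.
        if mount_point == "/" or fs_type == "swap":
            continue
        if "nofail" not in options.split(","):
            risky.append(mount_point)
    return risky


if __name__ == "__main__":
    # Hypothetical fstab for a VM with a boot disk and two secondary SSDs.
    sample = """
UUID=1111-aaaa  /          ext4  defaults                 0 1
UUID=2222-bbbb  /mnt/data  ext4  discard,defaults         0 2
UUID=3333-cccc  /mnt/logs  ext4  discard,defaults,nofail  0 2
"""
    print(find_risky_mounts(sample))  # ['/mnt/data']
```

Running it against a copy of the VM's fstab before a clone or a CPU/memory change would show which mounts could block the restart.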

This week I spent a couple of days configuring Google Stackdriver Monitoring. After a couple of frustrations with not being sure how to get started, I installed an agent on a server and I was off to the races. Installing agents is definitely the way to get going. I found it easiest to create Uptime Monitors and their associated Notifications at the instance level, on a server-by-server basis. Doing it this way let me group the Uptime Monitors by instance, something I couldn't do when I created them outside of the instance. It was also simple to monitor external dependency servers that we don't own but need running for our testing. I integrated my Notifications with HipChat in a snap. I also installed plugins for monitoring Postgres and MySQL; these worked great as long as I had the user/role/permissions set correctly. I'm super impressed with Stackdriver Monitoring, and will probably use it even if we have to switch over to hosting with AWS.

Our biggest roadblock with the Google Cloud Platform right now is that it doesn't have a data centre in Canada. That could be a big selling feature for many of my clients, and because GCP doesn't have one, we may have to consider other options (like AWS/EC2, which is spinning up a new data centre in Montreal towards the end of 2016...). Privacy matters! Hope you're listening, Google...

Saturday, August 20, 2016

Thursday, April 7, 2016

EC2 and the AWS (Amazon Web Services) Free Tier - My First Experience


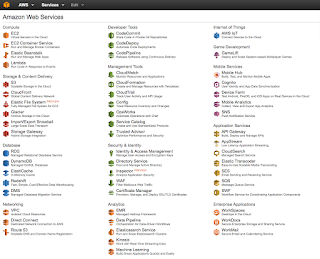

The Amazon Web Services Management Console

About six weeks ago I purchased Amazon Web Services in Action, written by Andreas and Michael Wittig and published by Manning. It gave me a great primer on setting up my AWS account, my billing alert, and my first couple of VMs. I leveraged that experience, crossed my fingers, spun up 18 VMs for my students, and hoped I wouldn't get charged a mint for having them running 24/7 for a few days. It was around 1 pm on a Friday when I created them and gave them to my students to use. Imagine my surprise when I checked my billing page in AWS on Monday and discovered I had only been charged $2.81!

On the last day of class, I split the students into two teams, gave them large web projects to do, and spun up a 'T1 Micro' for each team. I gave them some scripts they could run to create some swap memory if they needed it. They ran those scripts right away, and within an hour (with 9 people continuously uploading files and content into those systems) the T1 Micros' CPUs were pinned. So over lunch I quickly imaged them, spun up an 'M3 Large' VM (2 CPUs, 7.5 GiB memory) for each team, and put their images on the new VMs. I ran into one issue spinning up the new team VMs: I had to shut down the original team VMs first, because there is a limit/quota of 20 VMs on the 'free tier' in AWS. Aside from that and the changed IP addresses, the transition was seamless. I was a happy camper, and now that the students had responsive VMs, so were they.

My total bill for the week: $38! A colleague pointed out that I probably could have added auto-scaling to those team VMs and increased the CPU and memory in place, without losing the current IPs. He's probably right, but I didn't have the experience, and I didn't want to waste class time (and potentially lose the students' work) by trying something I didn't know how to do. All in all, I was very impressed with my first run of EC2 in AWS. It was reasonably priced, responsive, and easy to use. I'd definitely do it again.

Monday, March 28, 2016

The Perils and Pitfalls of Open-Source Software

Open-source software is the backbone of most of the internet. Seriously. For more than a decade, small business websites and mainstream web applications have used open-source software to build their solutions. Web projects (and frankly, most software projects in general) that don't depend on an open-source component of some kind are the RARE exception to the rule.

Should we be concerned about this? I think so. Here's why:

- Coders aren't implementing open-source code properly. In my experience, any open-source code dependencies should be referenced locally. Many coders fail to do this and instead reference external code libraries directly from their code. What happens when that external 'point of origin' has a DNS issue, is hit with a DDoS attack, or is just taken down? Your site can break.

Case in point: check out this article on msn.ca, 'How One Programmer Broke the Internet'. In a nutshell, one open-source programmer got frustrated with a company over a trademark issue: his open-source project had the same name as a messaging app from a company in Canada. He retaliated by pulling all of his packages from the npm registry. It turned out that one of them, a tiny module called left-pad, had been leveraged (directly or through other packages) by millions of coders the world over, and once it was removed, their builds started failing with this error:

npm ERR! 404 'left-pad' is not in the npm registry

I believe that if developers had downloaded the npm JavaScript libraries and referenced them locally on their servers, they wouldn't have run into this issue, since they'd have had a local copy of the open-source code.

Another case in point: I worked on a project a number of years ago that had dependencies on jakarta.org, an open-source site at the time that hosted a bunch of libraries and schemas for large open-source projects like Struts and Spring. Some of the code in those projects had linked references to schemas (rules) that pointed to http://jakarta.org. Unfortunately, we didn't think to change those links, and one day jakarta.org went down... and with it went our web site, because it couldn't reference those simple schemas on jakarta.org. After everything recovered, we quickly downloaded those schemas (and any other external references we found) and referenced them locally.

- Security. Do you know what is in the open-source system you're using? Over 60 million web sites have been built on an open-source content management system called WordPress. Because it is open source, everyone can see all of the code it is built with. This could potentially allow hackers to spot weaknesses in the system. However, WordPress has fairly strict code review and auditing in place to guard against this. They also patch any issues that are found quickly and release those patches to everyone. The question then becomes: does your website administrator patch your CMS?

Another security issue I ran into was with a different content management system. I discovered an undocumented 'back door' buried in the configuration that gave anyone who knew about it administrative access to the system (allowing them to log into the CMS as an Administrator and giving them the power to delete the entire site if they knew what they were doing). Some time later, I found out that some developers who had used this CMS weren't aware of that back door and had left it open. I informed them about it, and they quickly (and nervously) slammed it shut. Get familiar with the code you are implementing!

- Code Bloat (importing a bunch of libraries for one simple bit of functionality). Sometimes developers will download a large open-source library to take advantage of a subset of its functionality and save time. Unfortunately, this can lead to code bloat: your application runs slowly because it's loading a monster library to use a small piece of functionality.

- Support (or the lack of it). Developers need to be discerning when they decide to use an open-source library. There are vast numbers of open-source projects out there, and one needs to be wise about which one to use. Some simple guidelines for choosing an open-source project are:

- How many downloads (implementations) does it have? The more, the better, because that means it's popular and likely reviewed more.

- Is there good support for it? In other words, if you run into issues or errors trying to use it, will it be easy to find a solution for your issue in forums or from the creators?

- Is it well documented? If the documentation is thorough, or if there have been published books written about the project, you're likely in good hands.

- Is it easy to implement? You don't want to waste your time trying to get your new open-source implementation up and working. The facilities and resources are out there for project owners to provide either the documentation or VM snapshots (or what have you) to make setting up your own implementation quick and easy.

- How long has it been around? Developers should wait to implement open-source projects with a short history. Bleeding-edge isn't always the cutting edge. Wait for projects to gain a critical mass in the industry before implementing them if you can help it.
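To act on the first point above (keeping local copies of open-source dependencies), one quick audit is to list the scripts and stylesheets a page still loads from someone else's server. This is a minimal sketch using Python's standard-library HTML parser; `ExternalRefFinder` and `find_external_refs` are hypothetical names, and the sketch only inspects `<script src>` and `<link rel="stylesheet">` tags. It won't catch URLs built inside JavaScript or schema references in XML config files like the jakarta.org ones.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalRefFinder(HTMLParser):
    """Collects script/stylesheet URLs that point at another host."""

    def __init__(self) -> None:
        super().__init__()
        self.external: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        url = None
        if tag == "script":
            url = attr_map.get("src")
        elif tag == "link" and (attr_map.get("rel") or "").lower() == "stylesheet":
            url = attr_map.get("href")
        # A URL with a network location ("https://cdn..." or "//cdn...") is
        # fetched from someone else's server; relative paths are served locally.
        if url and urlparse(url).netloc:
            self.external.append(url)


def find_external_refs(html: str) -> list[str]:
    finder = ExternalRefFinder()
    finder.feed(html)
    finder.close()
    return finder.external


if __name__ == "__main__":
    page = (
        '<script src="https://cdn.example.com/jquery.min.js"></script>'
        '<script src="js/app.js"></script>'
        '<link rel="stylesheet" href="css/site.css">'
    )
    print(find_external_refs(page))  # ['https://cdn.example.com/jquery.min.js']
```

Each URL it reports is a candidate for downloading and serving from your own server.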

Friday, March 18, 2016

Random Thoughts About the DevOps Movement in IT - Part 2

The Phoenix Project was recommended to me over a year ago as a good read about DevOps. It emphasizes the benefits of DevOps to management, shareholders, and the company as a whole. My impressions of the book were:

- It's a great, fun read; however, it skims over the difficulties and trials of actually automating the technical systems and the software development process, which is where the rubber really hits the road. It seems to romanticize automation in IT a bit, as if DevOps is the goose that will lay your golden egg. In my experience, there's significantly more work involved in getting that golden egg.

- It also glosses over how to get the Security team on board with what DevOps wants to do. In many companies, the Security team holds the trump card. If they decide to change all your certs from 128-bit encryption to 2048-bit encryption (don't laugh, I've seen it happen, and we had to regenerate certs for all applicable servers in all environments), their wish is your command, unless you can convince someone influential that that kind of encryption is overkill in a non-prod environment.

Finding and Cracking That Golden Egg

Hurdles I've encountered enroute to the DevOps 'golden egg' are:

- Siloed application projects.

- Lack of consistent naming conventions for deployment artifacts and build tags/versions is majorly detrimental to the automation process.

- Lack of understanding of the dependencies between application projects (API contracts or library dependencies). If you don't understand the dependencies between your applications, they may not compile correctly, or they may not communicate properly with each other.

- Lack of consistent development methodology and culture between teams. If you have one team that doesn't get behind the new culture, and they insist on manually deploying and 'tweaking' their code/artifacts after deployment, they risk the entire release. Getting everyone on board with culture is challenging.

- Overusing a tool. When you have a hammer (a good DevOps tool), everything is a nail. Generally, many of the DevOps tools out there all have their niche. You could potentially use Chef, Puppet, or Ansible to do all your provisioning and automation. Is that the best solution though? I'm inclined to say no. How much hacking and tweaking are you having to do to get all your automation to work with that one tool? Use the tools for what they are best at, what they were originally made for. Many of these tools have Open Source licenses and new functionality is being added to them all the time. While it might seem that the new functionality is turning your favourite tool into the 'one tool to rule them all', you might have a big headache getting there, trying to push a square peg in a round hole.

- Lack of version control. Everything must be stored in a repository. The stuff that isn't will bite you. VM Templates, DB baselines, your automation config - it all needs to go in there.

- DB Management. All automated DB scripts should be re-runnable and stored in a repo.

- Security. How to satisfy the Security Team? How to automate certificate generation when they are requiring you to use signed certs? How to manage all those passwords in your configuration?

- Edge Cases. Any significantly sized enterprise is going to have/require environments that land outside your standard cookie cutter automation. Causes I've seen for this are:

- Billing cycles - we needed a block of environments that were flexible with moving time so QA didn't have to wait 30 days to test the next set of bills and invoices.

- Stubbing vs. Real Integration - Depending on the complexity of integration testing required, there may be many variations of how your integration is set up in various environments - what end-points are mocked vs. which ones point to some 'real' service.

- New Changes/Requirements - Perhaps new functionality requires new servers or services. This can make your support environments look different from your development environments.

- Licensing issues. When everything is automated, it can be easy to lose track of how many environments you have 'active' versus how many you are licensed for. License compliance can be a huge issue with automation; check out this interesting post: 'Running Java on Docker? You're Breaking the Law!'

- Downstream Dependencies. This is where the Ops of DevOps comes into play. Any downstream dependencies your automated system has need to be monitored and understood. You can't meet your SLA with your client if your downstream dependencies can't meet that same SLA. Important systems to consider here are LDAP, DNS, the network, your ISP, and other integration points.

- YAML files. Yes, perhaps they are more terse than storing your deployment config in XML. However, I'm at a bit of a loss to see how they are better than a well-named and well-formatted CSV file. Sure, you can 'see' and manage a hierarchy with them, but you can do the same in a CSV file with the proper taxonomy. YAML files use more processing power to parse and have extraneous lines in them because they're trying to manage (and give a visual representation of) the hierarchy of the properties. I've seen YAML files where these extra lines with no values account for a significant percentage of the total lines in the file, making the file more difficult to maintain and prone to fat fingers. Several major DevOps tools use these files, and I really can't see a good reason why, except that they were the 'new, cool thing to do.'
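To make the 'CSV with the proper taxonomy' idea concrete: a nested configuration can be stored as one row per setting, with dotted key paths standing in for the hierarchy, and rebuilt on load. This is a minimal sketch of that idea in Python's standard `csv` module; the function names and the dotted-key convention are assumptions for illustration, not the format of any particular DevOps tool.

```python
import csv
import io


def flatten(tree: dict, prefix: str = "") -> dict[str, str]:
    """Turn nested settings into {'parent.child': value} pairs."""
    flat: dict[str, str] = {}
    for key, value in tree.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = str(value)
    return flat


def unflatten(flat: dict[str, str]) -> dict:
    """Rebuild the nested structure from dotted key paths."""
    tree: dict = {}
    for path, value in flat.items():
        *parents, leaf = path.split(".")
        node = tree
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return tree


def to_csv(flat: dict[str, str]) -> str:
    """Write one 'key,value' row per setting, sorted so diffs stay stable."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["key", "value"])
    for key in sorted(flat):
        writer.writerow([key, flat[key]])
    return buf.getvalue()


def from_csv(text: str) -> dict[str, str]:
    return {row["key"]: row["value"] for row in csv.DictReader(io.StringIO(text))}


if __name__ == "__main__":
    # Hypothetical deployment config; in YAML this would be three levels deep.
    config = {"app": {"name": "billing", "db": {"host": "db01", "port": "5432"}}}
    text = to_csv(flatten(config))
    print(text)
    assert unflatten(from_csv(text)) == config
```

The printed file has no value-less lines: `app.db.host,db01`, `app.db.port,5432`, and `app.name,billing` under a `key,value` header. Two limitations of this particular scheme: a key can't itself contain a dot, and every value comes back as a string, so types would need a convention of their own.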

Wednesday, March 16, 2016

Random Thoughts About the DevOps Movement in IT - Part 1

DevOps-related books I have in my library currently

Software development methodologies and practices have evolved a lot since then. It's been a challenge to keep up. I'm a pragmatic guy, and I thought one could get pretty much all the functionality needed for an automated build and deployment stack using just Ant, CruiseControl, Kickstart, VMware, and some helpful APIs. Clearly I was wrong.

Cause for Pause

Without a doubt, the DevOps movement has captured the imagination of many a software developer. Just look at all the tools out there now! Chef, Puppet, Bamboo, RunDeck, Octopus, Docker, TeamCity, Jenkins, RubiconRed, Ansible, Vagrant, Gradle, Grails, AWS... I could go on. I'm beginning to question whether the polarization and proliferation of these tools has been helpful. I find many companies looking to fill a DevOps role are asking for people who have experience with the specific tool stack they are using. That must make human resource managers pull their hair out. Even personally, I'm concerned about hitching my wagon to the wrong horse. If I accept a position with a company that is using a Chef/Docker stack (for example), but the industry decides that Ansible/Bamboo is the holy grail, have I committed professional suicide? Probably not, but it does make one consider job opportunities carefully.

Looking Forward

This P and P (proliferation and polarization) of DevOps tools makes me wonder what the future is going to look like for the DevOps movement. Consider what Microsoft did with C#. Instead of continuing to diverge and go their own way with the replacement for VB, they created a language with a syntax that essentially brought the software development industry back together (in a way). Brilliant move. Java developers quickly ported their favourite tools/frameworks over to C# and suddenly developing with Microsoft tools was cool again. It would sure be nice if something like that happened with the DevOps tools.

Something else to consider for the future: so far, in my experience with overseas, outsourced teams, they have not yet embraced the DevOps movement. As a result, they aren't as efficient or as competitive as they could be. When they do jump on the DevOps bandwagon and truly tap into its potential, it could be a game changer for people like me....

Tools to Watch

- Perhaps Amazon is the 'new Microsoft' with its expansive and ever expanding AWS tool stack? There's definitely some momentum and smart thinking going on there. They appear to have automated provisioning all wrapped up in a bow.

- Atlassian is another company (I didn't realize they were based out of Australia) that is putting together DevOps tools that have a lot of momentum in the industry. Their niche is collaboration tools.

- Puppet and Chef both have a strong foothold in the DevOps community. Both of them are adding new features all the time, enabling them to automate the deployment and provisioning of more products and systems. Many people use Puppet and Chef in conjunction with RunDeck or Docker to get all the automation they are looking for.

Friday, March 4, 2016

Teaching an IT class (JavaScript, XML, C#, VB, CMS/PHP)

I've taught part-time at SAIT for 11 years now. I've had more than 30 different classes with 5 different courses. Recently I've been challenged by a colleague (also a part-time teacher here at SAIT) to make my courses better and more interactive. While I thought my courses were pretty interactive to begin with, his ideas have definitely pushed me further in a good way.

All the courses I've taught have been introductions to different languages or to working with code: Visual Basic, C#, XML, JavaScript, PHP, and content management systems like WordPress and Drupal. Until recently, my courses had been structured, at a high level, as follows: I demonstrate a new concept/code idea to the class and have them follow along. Once they are reasonably comfortable implementing it (perhaps after an exercise or two of following along like this), I give them an exercise that they have to try to do themselves. Sometimes I'll add a twist to make them think a bit. For the most part this has worked great. Here's what it has looked like (an example with XML):

- Intro to the language and syntax. I'll go through several PowerPoint slides that discuss:

- What does well formed XML mean?

- How do you ensure your XML is well formed?

- What are the keys/rules to well formed XML?

- I'll ask the class questions as we go along and get them to answer.

- We'll code a simple XML file together. I'll make intentional mistakes along the way and see if the class is paying attention, or just call them out and ask 'Do you think this is right?' I will also put comments in my code so the students can refer to them later. Once done, I'll give this complete exercise to the students so they have it to refer to.

- Then I'll give them an XML file that is not well-formed (completely broken) and get them to try and fix it.

- After about 15 minutes, I'll try and fix it myself in front of them, getting them to help me.

- Once that's done with my comments, I'll again put it in a shared folder for the students to grab so they can refer to it later.

- I'll give them another broken XML file and get them to fix it.

- Again, after about 15 minutes, I'll try and fix it myself in front of them, getting them to help me.

- Once that's done with my comments, I'll again put it in a shared folder for the students to grab so they can refer to it later.

- Then, next concept.... and repeat.
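For the broken-file exercises above, students can also check a fix with a parser instead of by eye. This is a minimal sketch using Python's built-in `xml.etree.ElementTree`, which refuses to parse XML that isn't well-formed (unclosed or improperly nested tags, more than one root element, unquoted attribute values). `check_well_formed` is a hypothetical helper name, and it checks well-formedness only, not validity against a schema or DTD.

```python
import xml.etree.ElementTree as ET


def check_well_formed(xml_text: str) -> tuple[bool, str]:
    """Return (True, "well-formed") or (False, the parser's error message)."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError as err:
        # The message names the problem and where the parser first hit it.
        return False, str(err)
    return True, "well-formed"


if __name__ == "__main__":
    samples = {
        "good": "<catalog><book id='1'><title>XML Basics</title></book></catalog>",
        "bad nesting": "<catalog><book><title>XML Basics</book></title></catalog>",
        "two roots": "<book/><book/>",
        "unquoted attribute": "<book id=1/>",
        "unclosed root": "<catalog>",
    }
    for name, text in samples.items():
        ok, message = check_well_formed(text)
        print(f"{name}: {'OK' if ok else 'BROKEN'} ({message})")
```

Because the parser stops at the first error, fixing one problem and re-running mirrors the step-by-step fixing done together in class.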

As I mentioned earlier, I've been challenged by a colleague to make my courses better and even more collaborative, and (hopefully) to get the students more return on their tuition investment. Here's what I'm changing and improving:

- Using a Repo. Instead of using a shared folder to give files to students and have them turn in assignments, I'm using BitBucket with SourceTree (and forcing them to use it too). This is great practice for them for the industry, and so far has worked really well. My SourceTree client does get bogged down at times (with over 30 repositories) but it hasn't been a major deal.

- Commenting. I continue putting lots of comments in my code for the class to refer to. They really like this and find it helpful when they review after class. I'll definitely keep doing this.

- Error Challenge. I tend to fat-finger a bit when I'm coding in front of the class (even when I'm working off a printed copy of a complete exercise). Sometimes these errors are hard to catch, and I waste some class time while I try to figure out what I did. So instead of wasting our collective time, and to help students pay attention, I instituted a little game: if a student catches an error I make in my code, they get a point. Points accrue for the duration of the course. The student with the most points at the end of the course gets a free Starbucks gift card (or something like it). This worked really well, and I'm definitely going to do it again.

- Student Journal. Student feedback is key for me. I teach in 'Fast-Track' programs where the students know that they are going to get inundated hard and fast with new concepts all the time. Making sure I'm not bulldozing them with new material is key, so having a feedback loop helps. Up to this point I've just been asking them in class, 'How's the speed? Am I going too fast?' But my colleague suggested getting the students to journal and having them commit that to their repo, so I can see it as another feedback loop. I did this in my last course and it worked quite well. I don't have them journal every day, just once a week or so, but the feedback I got from them individually was great.

- Group Projects. Out in the industry, most of these students are going to be working on teams, so having them do exercises in class as teams seems logical. It turns out that students, for the most part, really like having group projects to work on. They can collaborate together and learn from each other. It also pushes them to compete a little which makes for an even better result. I have them create a team repo for the project(s), and this also gives them experience with some BitBucket administration (branching and merging, etc.) which also prepares them for the industry.

Sunday, January 24, 2016

IOException - Disk Quota Exceeded error - yet there's lots of disk space

I use a Mac for work. I've run into an interesting issue with making backups of client web sites and zip files with my Mac... It seems that whenever I've zipped a file and backed it up to my Mac, I get these wonderful __MACOSX folders - that's 2 underscores at the beginning of the folder name injected into the folder mix.

Restoring a site from one of those backups on a Linux machine can have adverse consequences because of those 'injected' __MACOSX folders. It seems that certain flavours of Linux really don't like that folder name, and as a result will not write a single file to the machine any more... at all. Instead, they throw a 'Disk Quota Exceeded' error. You can try touching a file, you can try unzipping some other files; whatever you do, you'll get that error. The error didn't go away for me until I deleted all the __MACOSX folders that got copied up to my machine in the zip file. Once I did that, it was all good.

The interesting thing about this error was I could have tons of disk space still on the machine, and yet it would still throw that error until I removed those offending __MACOSX folders.

There are various commands out there for removing these folders from zip files created on a Mac... something like this:

zip -d your-archive.zip "__MACOSX*"
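If the `zip` command-line tool isn't available on the machine doing the restore, the same cleanup can be sketched with Python's standard `zipfile` module: copy the archive entry by entry and skip anything under a `__MACOSX` folder. This is a minimal sketch; `strip_macosx` is a hypothetical helper name, and unlike the `"__MACOSX*"` pattern above it also drops `__MACOSX` folders nested deeper in the archive.

```python
import io
import zipfile


def strip_macosx(src, dst) -> list[str]:
    """Copy zip `src` to `dst`, dropping anything under a __MACOSX folder.

    `src` and `dst` may be file paths or binary file objects.
    Returns the names of the entries that were removed.
    """
    removed = []
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w") as zout:
        for info in zin.infolist():
            # Match a __MACOSX path component at any depth, not only at the root.
            if "__MACOSX" in info.filename.split("/"):
                removed.append(info.filename)
                continue
            # Reusing the ZipInfo keeps each entry's name, timestamp and compression.
            zout.writestr(info, zin.read(info.filename))
    return removed


if __name__ == "__main__":
    # Build a small in-memory archive that mimics a Mac-created backup.
    original = io.BytesIO()
    with zipfile.ZipFile(original, "w") as z:
        z.writestr("site/index.html", "<html></html>")
        z.writestr("__MACOSX/site/._index.html", "resource fork data")
    cleaned = io.BytesIO()
    print(strip_macosx(original, cleaned))  # ['__MACOSX/site/._index.html']
    print(zipfile.ZipFile(cleaned).namelist())  # ['site/index.html']
```

With real files, the call would be something like `strip_macosx("backup.zip", "backup-clean.zip")`, run before the archive is copied to the Linux server.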

Monday, January 18, 2016

Get Hired as an IT Newbie Part 2 - Soft Skills

I participated in a focus group this past week at SAIT where we discussed their 'Web Developer Fast-Track Program' and its relevance to the industry. The goal of the meeting was to figure out how we can make the course more relevant to the continual evolution of technology in the industry, so that students are better prepared for positions when they leave. The discussion was engaging and took some interesting turns. Here's what I took away related to soft skills:

Soft Skills

Employers like student hires to be reasonably technically adept. Their technical expectations aren't in the stratosphere. However, they want their student hires to have more than just technical ability. Students should be well versed in soft skills.

Soft skills are (to some degree, I'm sure) an ambiguous subject for many students. I can hear you thinking, 'What do people mean when they say that??' In the context of our discussion yesterday, it meant that the prospective IT employers in the focus group were looking for students to have, in addition to technical skills:

- An ability to communicate well with a client - verbally, directly in front of them or over the phone. Also having the discernment to know when to send an email versus when to talk verbally. Poorly written emails are notorious for communicating the wrong things - particularly negative feelings where there were none.

- An ability to know what good work is. Does the design and function of a web site fit the purpose/company it was designed for? For example, does a sales/marketing website have lots of good calls to action? Does the site feel right? Would 'Joe's Mom' know how to navigate and use the site?

- A can-do attitude. Students will likely get the soul-crushing repetitive work that senior developers/designers don't want to do (or don't have time to do). Students should accept this and be prepared to do it with gusto. If you're in an interview and you don't have a clue about a technology they asked you about, reply: "I don't know about that, but I will know about it tomorrow" and mean it.

- An ability and desire to collaborate and work in a team setting. It's a fact of the industry: if you want to work in an agency, you're going to need to be able to work on a team and collaborate with team members.

- Students coming out of school should love learning and know how to learn things/solve problems on their own.

- Students should be able to receive constructive criticism without responding with excuses about bad instructors, poor course material, or the speed at which things were taught. You don't get much chance for excuses on the job. Employers are looking to 'break even' economically on their student resource investment within 4 months.

Saturday, January 16, 2016

Get Hired as an IT Newbie Part 1 - Have a Good Portfolio!

I participated in a focus group this past week at SAIT where we discussed their 'Web Developer Fast Track Program' and its relevance to the industry. The goal of the meeting was to figure out how we can make the course more relevant to the continual evolution of technology in the industry, so that students are better prepared for positions when they leave. The discussion was engaging and took some interesting turns. Here's what I took away related to portfolios:

Student Portfolios

15 years ago I went through a similar IT fast-track program, and we had to build portfolios of our work for potential employers. I wasn't aware of a single employer who looked at my portfolio, and as a result I haven't placed much emphasis on portfolios in my classes. BIG MISTAKE.

It turns out that prospective employers for SAIT students DO look at their online portfolios.... and generally weren't super impressed. Here's why:

- Industry attendees said that a student's portfolio should reflect the position or career track that the students are looking to get into. There should be evidence in the portfolio that the student tried on their own to investigate, explore, and work with technologies and code that interests them. Posting student projects (with every student having very similar projects) doesn't help anyone in making a hiring decision.

- Prospective employers are not just interested in the technologies that students used, but also:

- Thought processes that the students went through in completing their projects - why they chose to make certain decisions (for example, use a canned WordPress theme instead of design/develop their own)

- Challenges students encountered in design and development, and how they overcame those problems on their way to the completed project. Employers are interested in soft skills like perseverance, the ability to google a problem and uncover a solution on your own, the ability to ask for help when you are stumped (not before you've tried googling the problem), etc.

- Is the student willing to go the extra mile, put in some extra effort and explore interesting tools and technologies outside the classroom? The industry was quite clear that this was one of the main things they were looking for - a motivated individual with the right attitude. This kind of motivation should be clearly seen in a student's portfolio.

There were also some suggestions during the focus group that students should be given the opportunity to provide constructive feedback to their peers on their portfolios. A good critique is a gift. Can students accept constructive feedback and use it to improve? Could providing peer critiques give them a better perspective on, and more experience with, what is good (design, code, functionality, etc.) versus what is not? We thought so.

Finally, here are a couple of links to student portfolios that 'make the grade', so to speak, in my humble opinion. One is from a student of mine this past semester; the other is from a current student at the University of Calgary:

- Gary S. Jennings (former SAIT student)

- Carrie Mah (U of C student)
